The Infinite Scroll Trap

Modern digital interfaces are engineered for addiction. Infinite scroll algorithms leverage variable reward schedules to keep users in a state of passive consumption, often leading to “Doom Scrolling”—a compulsive behavior that negatively impacts mental well-being and time management.

Modern interactive rituals often demand high cognitive attention—constant notifications, infinite feeds, and bright screens. The opportunity was to design an object that exists in the “periphery” of our attention, providing comfort without demanding interaction.

Social Robotics as a Nudge

Drawing from “Social Tech” frameworks and soft-robotic research, the approach focused on non-verbal communication. By studying how humans comfort each other through physical presence, I developed a system that uses breathing as a primary feedback loop.

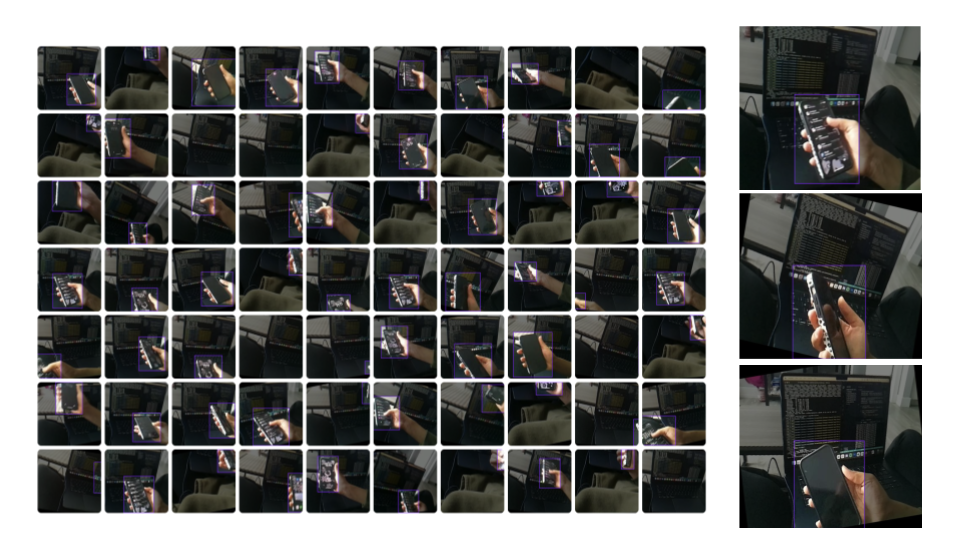

Engineering the Vision System

Developing a robust detection system required moving beyond off-the-shelf solutions to ensure both accuracy and privacy. We engineered a small custom machine learning model trained specifically to identify the “Doom Scroll” posture: a distinctive combination of hand positioning and phone orientation. By curating a dataset of over 400 images using Roboflow, we fine-tuned the computer vision model to distinguish between active phone use and passive scrolling. This targeted training lets the system intervene only when necessary, minimizing false positives and ensuring the robot’s reactions feel intuitive rather than intrusive.
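One common way to keep a robot from reacting to a single noisy frame is to require the detection to persist across a short window of frames. The sketch below illustrates that idea only; it is not the project's actual code, and `DoomScrollDebouncer`, the window size, and both thresholds are hypothetical values chosen for illustration.

```python
from collections import deque


class DoomScrollDebouncer:
    """Fires only when most of the recent frames agree the posture is present."""

    def __init__(self, window: int = 10, min_hits: int = 8, min_confidence: float = 0.6):
        # All three values are illustrative assumptions, not measured settings.
        self.frames = deque(maxlen=window)
        self.min_hits = min_hits
        self.min_confidence = min_confidence

    def update(self, confidence: float) -> bool:
        """Record one frame's model confidence; return True if the robot should react."""
        self.frames.append(confidence >= self.min_confidence)
        return sum(self.frames) >= self.min_hits


debouncer = DoomScrollDebouncer(window=5, min_hits=4)
# A single confident frame is not enough to trigger a reaction.
print(debouncer.update(0.9))
```

A larger window makes reactions slower but steadier, so the values would need tuning against real footage.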

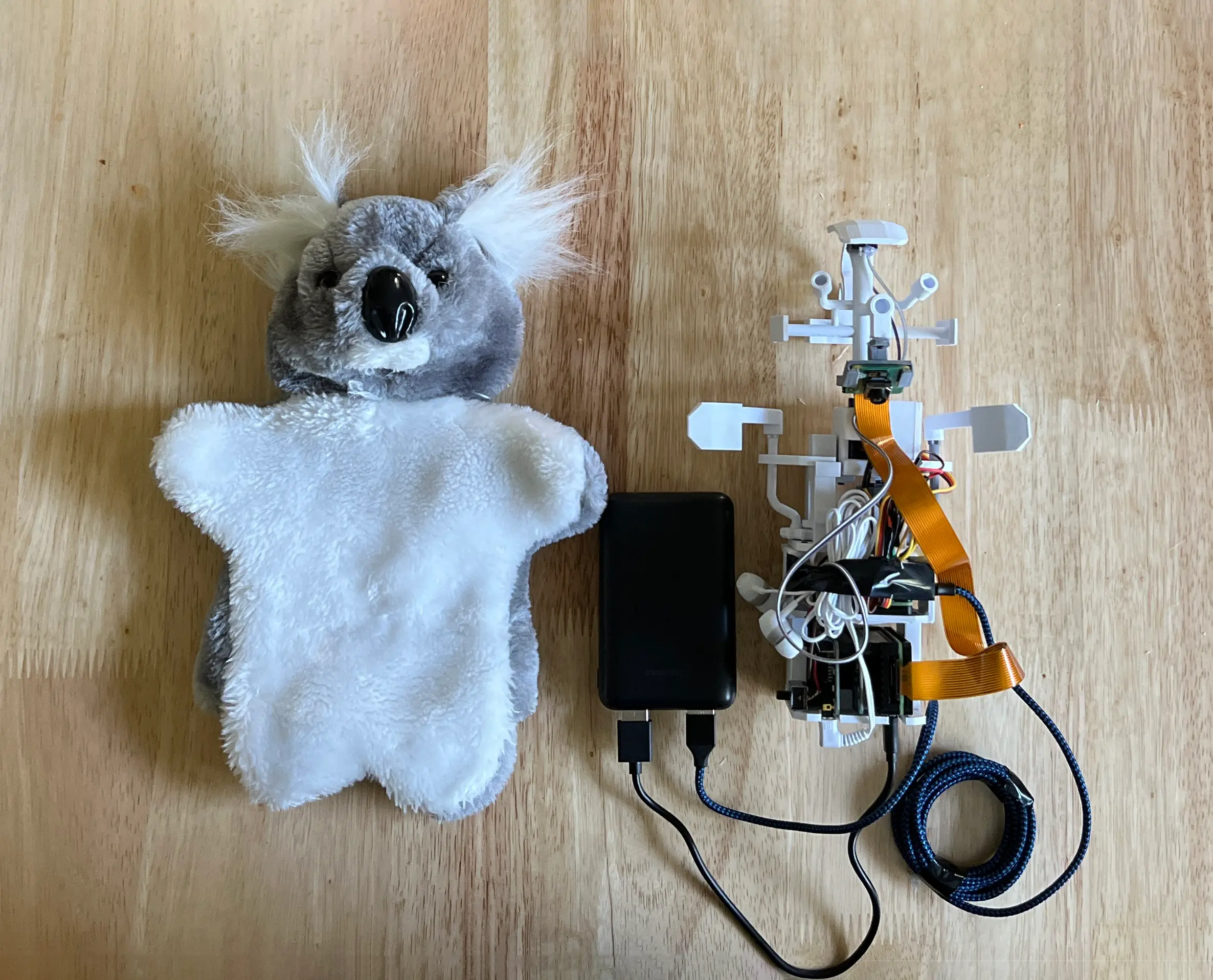

Piko's Anatomy

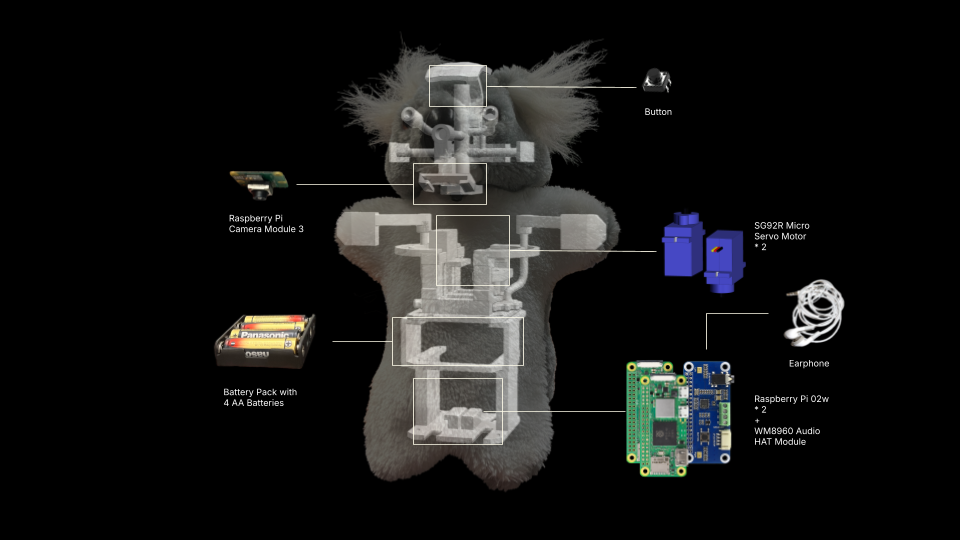

To bring Piko to life, we needed a hardware architecture that could support real-time vision, complex motion, and audio processing within a compact, huggable form factor. The internal chassis is a custom-designed, 3D-printed skeleton that houses the distributed computing system.

At the core of Piko’s awareness is a Raspberry Pi Camera Module 3. This wide-angle sensor provides the raw visual data needed for the “Inside-Out” detection model, allowing the robot to see the user’s hands and phone without capturing facial data.

Processing power is split to handle the load. A Raspberry Pi Zero 2 W acts as the central nervous system, managing the logic and communication. It is paired with a WM8960 Audio HAT, which drives the onboard speakers and captures ambient sound, enabling Piko to “speak” and react audibly to the user’s behavior.

Two SG90 Micro Servo Motors control the arm and head movements, allowing for gestures ranging from a gentle “wave” to an emphatic “clap.” These motors are orchestrated to create fluid, organic motion that mimics biological responses rather than robotic jerks.
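Fluid servo motion is usually achieved by easing between poses rather than jumping straight to the target angle. The following is a minimal sketch of that technique, assuming a 50 Hz PWM signal and a nominal 1 to 2 ms pulse range for the SG90; real servos vary, so the pulse limits are assumptions that need calibration, and the function names are hypothetical.

```python
import math

PERIOD_MS = 20.0      # 50 Hz PWM frame, typical for hobby servos
MIN_PULSE_MS = 1.0    # assumed pulse width at 0 degrees; calibrate per servo
MAX_PULSE_MS = 2.0    # assumed pulse width at 180 degrees; calibrate per servo


def ease_in_out(t: float) -> float:
    """Cosine easing: maps 0..1 to 0..1 with zero velocity at both ends."""
    return 0.5 - 0.5 * math.cos(math.pi * t)


def eased_angles(start: float, end: float, steps: int) -> list[float]:
    """Angles from start to end that move slowly at the edges and faster mid-way."""
    return [start + (end - start) * ease_in_out(i / steps) for i in range(steps + 1)]


def angle_to_duty_cycle(angle: float) -> float:
    """Convert an angle in degrees (clamped to 0-180) to a PWM duty cycle in percent."""
    angle = max(0.0, min(180.0, angle))
    pulse_ms = MIN_PULSE_MS + (MAX_PULSE_MS - MIN_PULSE_MS) * angle / 180.0
    return 100.0 * pulse_ms / PERIOD_MS


for angle in eased_angles(30, 120, steps=6):
    print(f"{angle:6.1f} deg -> {angle_to_duty_cycle(angle):.2f}% duty")
```

Each duty-cycle value would be handed to whatever PWM driver controls the servo, with a short delay between steps.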

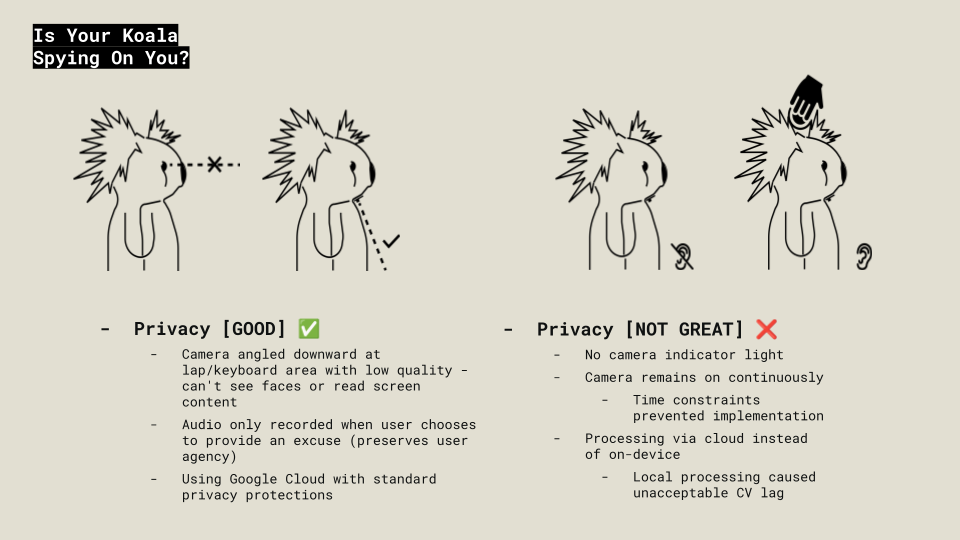

Building a robot that “watches” you requires rigorous ethical boundaries. We addressed this by embedding privacy directly into the physical form. The camera is strictly fixed downward, focusing solely on the lap area to detect phone usage without capturing faces.

To prevent intrusive monitoring, verbal interaction is physically gated. The robot remains silent until a manual “Tap-to-Speak” button is pressed, ensuring the user explicitly invites audio feedback. Furthermore, a “Double-Tap” instantly engages “Quiet Mode,” providing a hardware-level kill switch that empowers the user to silence the companion during deep work sessions.
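Telling a single tap from a double tap comes down to comparing the gap between presses against a time window. This sketch shows one way that could work; the 0.4-second window, the `classify_taps` function, and the action names are assumptions for illustration, not details of the prototype.

```python
DOUBLE_TAP_WINDOW_S = 0.4  # assumed maximum gap between the two taps of a double-tap


def classify_taps(press_times: list[float], window: float = DOUBLE_TAP_WINDOW_S) -> list[str]:
    """Group sorted button-press timestamps (in seconds) into single and double taps."""
    actions = []
    i = 0
    while i < len(press_times):
        has_next = i + 1 < len(press_times)
        if has_next and press_times[i + 1] - press_times[i] <= window:
            actions.append("quiet_mode")    # two presses close together
            i += 2
        else:
            actions.append("tap_to_speak")  # an isolated press
            i += 1
    return actions


print(classify_taps([0.0, 2.0, 2.25, 5.0]))
```

In a live system a single tap can only be confirmed once the window has elapsed without a second press, which adds a small delay before Piko starts listening.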

However, the prototype reveals ethical gaps. To achieve real-time responsiveness, it currently relies on cloud processing rather than on-device computation, and lacks a hardware indicator light for the camera. A production-ready iteration would need to shift all Computer Vision processing to the local device (Edge AI) to ensure that no visual data ever leaves the robot’s chassis.

We developed a small library of physical gestures to translate the system’s logic into social cues. Using the servo array, Piko moves through four distinct emotional states: Curiosity, Joy, Shyness, and Anger. These non-verbal signals allow the device to critique digital habits without being intrusive, leveraging our natural empathetic response to biological motion to break the user’s focus loop.

Technical Architecture

The architecture bridges hardware sensing with behavioral responses. The robot doesn’t just “breathe”; it reacts to how the user holds it, adjusting its pace to match or soothe the user’s own rhythm.

To achieve low-latency interactions, we distributed the workload across a three-node ecosystem. This split architecture balances heavy processing with real-time responsiveness.

The vision node handles the computational heavy lifting. It runs the computer vision model to detect “Hand + Phone” triggers and broadcasts events over MQTT as soon as they occur.

The logic node manages decision-making and personality. It listens for triggers and orchestrates the response, calling Google Gemini 2.0 Flash for audio and Pushover for phone notifications.

The motion node is dedicated to motion control. Keeping it separate isolates servo noise and power draw from the rest of the system; it receives commands to execute specific emotional gestures like “Angry” or “Curious.”
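The message flow between the three nodes can be sketched as a pair of JSON payloads: a vision event going in and a motion command coming out. Everything specific here is an assumption for illustration: the topic names, the payload fields, and the 60-second escalation rule are hypothetical, and the sketch leaves out the MQTT client itself (for example, a library such as paho-mqtt would carry these bytes).

```python
import json
import time

VISION_TOPIC = "piko/vision/event"     # hypothetical topic name
MOTION_TOPIC = "piko/motion/command"   # hypothetical topic name


def choose_gesture(scroll_seconds: float) -> str:
    """Hypothetical escalation policy: longer scrolling earns a stronger gesture."""
    return "Curious" if scroll_seconds < 60 else "Angry"


def encode_vision_event(scroll_seconds: float, confidence: float) -> bytes:
    """Payload the vision node would publish when it sees hand + phone."""
    event = {
        "trigger": "hand_phone",
        "scroll_seconds": scroll_seconds,
        "confidence": confidence,
        "sent_at": time.time(),
    }
    return json.dumps(event).encode("utf-8")


def handle_vision_event(payload: bytes) -> tuple[str, bytes]:
    """Logic node: turn a vision event into a (topic, payload) motion command."""
    event = json.loads(payload.decode("utf-8"))
    command = {"gesture": choose_gesture(event["scroll_seconds"])}
    return MOTION_TOPIC, json.dumps(command).encode("utf-8")


topic, command = handle_vision_event(encode_vision_event(scroll_seconds=90, confidence=0.87))
print(topic, command.decode("utf-8"))
```

Keeping the payloads this small means the motion node only has to parse a gesture name, which suits a controller dedicated to driving servos.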

Project Demo

A walkthrough of the fully functional prototype, demonstrating the computer vision detection triggers and the robot’s emotive feedback loops in real time.